Do not fear, I am with you to beat the Topic Modeling monster!

July 20, 2017 - Blogs on Text Analytics

As more data becomes available, it gets harder to find what you are looking for! As we discussed in our previous post, topic modeling is a powerful unsupervised machine learning technique for discovering hidden themes in a collection of documents. It has become popular across disciplines because it supports several applications: document clustering or classification, information retrieval, summarization, and of course topic identification. In a series of posts, we will review some general hints to help you get the most out of your topic model.

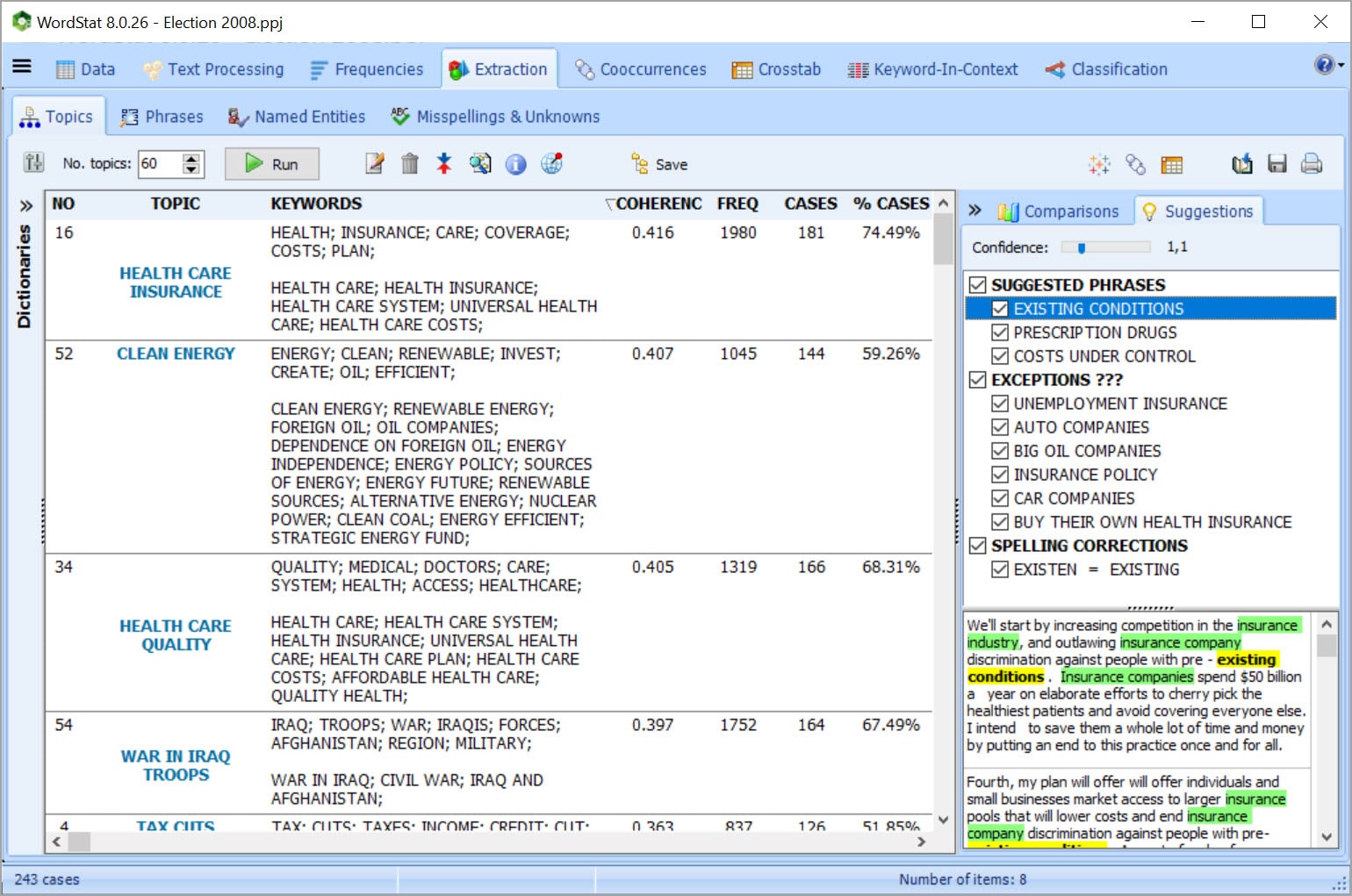

If you have a document collection and a rough estimate of the number of topics you expect to see, then you are almost set to start the topic modeling process. The number of topics is a fixed parameter that you must give to the model. Depending on the topic modeling technique you are using (there are many), there might be other parameters to set or fine-tune. For example, in WordStat you are able to set the loading parameter. But let’s simplify the problem a bit and just focus on the number of topics.

The appropriate number of topics is highly dependent on the data. However, there are some rules of thumb that should help you estimate it faster. If you have fewer than 500 cases with an average length of ~50 to 500 words, you can normally expect up to 10 topics. For 500 to 10,000 cases the number of topics may range from 10 to 50, and you may try 50 to 200 topics for 10,000 to 100,000 cases. As mentioned, this is not an exact solution, because we made some assumptions here, such as fixing the length of the cases, which is another variable: a collection of 100 long cases is expected to have more topics than a collection of 100 shorter cases.

The main point is that you need a starting guess for the number of topics in your corpus, based on your knowledge of the data. With that number in mind, you can then try different numbers of topics within the range to narrow it down, or even to find the best bet for the number of topics.
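The rules of thumb above can be written down as a small lookup. This is only a sketch of the ranges quoted in this post: the function name `suggested_topic_range` is made up for illustration, the lower bound of 1 for small collections is an assumption (the post only says "up to 10"), and the ranges assume cases of roughly 50 to 500 words.

```python
def suggested_topic_range(num_cases):
    """Return a (low, high) starting range for the number of topics.

    Encodes only the rules of thumb quoted in this post. Returns None
    for collections larger than the ranges the post covers.
    """
    if num_cases < 1:
        raise ValueError("num_cases must be positive")
    if num_cases < 500:
        return (1, 10)    # "up to 10"; the lower bound of 1 is an assumption
    if num_cases <= 10_000:
        return (10, 50)
    if num_cases <= 100_000:
        return (50, 200)
    return None           # no rule of thumb given for larger collections


print(suggested_topic_range(2_000))  # (10, 50)
```

Treat the result as a range to explore, not as an answer: collections with much longer or shorter cases will likely need a different range.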

Now suppose you have 2,000 cases of ~200 words each. From your educated guess, based on your knowledge of the data, you expect between 10 and 50 topics. For simplicity, imagine you have run two topic models, with 10 and 30 topics, and you get results as follows:

- Keywords in topic-3 in the model with 10 topics = {troop, iraq, al, qaeda, war, afghanistan, end, military}

- For the model with 30 topics you don’t have exactly the above topic, but you have two topics such as:

  - Topic-17 = {iraq, troop, war, military}

  - Topic-29 = {al, qaeda, afghanistan}

Alright! The message from your corpus is that the model with 30 topics gives you more specific topics. Which one is better depends on the objective of the analysis. If you would like more general categories, then the lower number of topics fits better; if you want to be more specific, then it is better to look for more, and more sharply defined, topics in the range. No pain, no gain! The more time you spend tuning the parameters, the more refined your results.
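One quick way to see that the 30-topic model is splitting the broader topic is to compare the keyword sets directly. The sketch below uses only the keywords listed above; the helper name `coverage` is hypothetical, and plain set overlap is a rough check, not a standard topic-similarity measure.

```python
# Keywords copied from the example above
coarse = {"troop", "iraq", "al", "qaeda", "war", "afghanistan", "end", "military"}
fine = {
    "topic-17": {"iraq", "troop", "war", "military"},
    "topic-29": {"al", "qaeda", "afghanistan"},
}


def coverage(coarse_topic, fine_topics):
    """Share of each fine topic's keywords that also appear in the coarse topic."""
    return {
        name: len(words & coarse_topic) / len(words)
        for name, words in fine_topics.items()
    }


shares = coverage(coarse, fine)
print(shares)  # {'topic-17': 1.0, 'topic-29': 1.0}

# Keywords of the coarse topic that neither fine topic picked up
leftover = coarse - set().union(*fine.values())
print(leftover)  # {'end'}
```

Both specific topics sit entirely inside the general one, which is what you would expect when a higher topic count breaks a broad theme into narrower ones.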

All good, but how are these topics generated? I’ll give you an example. Imagine you have a bunch of documents. In the documents, various sets of words co-occur in proximity to each other, for example: {health, care, system, …}, {health, insurance, companies, …}, {health, care, patient, doctor, …}, and {prescription, drug, medicare, doctor, …}. Because of these beautiful sets, you may expect to have a “health care” topic, which may look something like this: {health, care, insurance, medicare, drug, doctor, patient}. In other words, topic models dig into the pool of words in your corpus and learn patterns of words that are very likely to occur together within documents.
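To make the co-occurrence intuition concrete, here is a toy count of how often word pairs show up in the same document. It is not how an actual topic model is fitted (real techniques use probabilistic or factor-based models); it only illustrates the raw signal they pick up on. The four "documents" are the word sets from the example above, using just the words that are listed.

```python
from collections import Counter
from itertools import combinations

# Toy documents: the word sets from the example, listed words only
docs = [
    ["health", "care", "system"],
    ["health", "insurance", "companies"],
    ["health", "care", "patient", "doctor"],
    ["prescription", "drug", "medicare", "doctor"],
]

# Count each unordered word pair once per document in which both words appear
pair_counts = Counter()
for doc in docs:
    for pair in combinations(sorted(set(doc)), 2):
        pair_counts[pair] += 1

print(pair_counts[("care", "health")])    # 2
print(pair_counts[("doctor", "health")])  # 1
```

Pairs such as ("care", "health") that recur across documents are the kind of pattern that ends up grouped into a single topic.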

Okay, this gives us a nice hint! Words with higher frequency are more likely to appear in the results! So removing the very low-frequency terms does little harm, because they are weak features of the corpus. What is the best threshold for removing low-frequency words? Check out our next post 😊
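In the meantime, the filtering step itself can be sketched in a few lines. The function name `drop_rare_terms` and the cutoff `min_doc_count=2` are placeholders chosen for illustration only; this is not a recommended threshold, and counting the number of documents a term appears in is just one possible definition of "low-frequency".

```python
from collections import Counter


def drop_rare_terms(docs, min_doc_count=2):
    """Remove terms that appear in fewer than min_doc_count documents.

    min_doc_count=2 is an arbitrary placeholder, not a recommendation.
    """
    # Document frequency: in how many documents does each term occur?
    doc_freq = Counter(term for doc in docs for term in set(doc))
    return [[t for t in doc if doc_freq[t] >= min_doc_count] for doc in docs]


docs = [
    ["health", "care", "system"],
    ["health", "insurance", "companies"],
    ["health", "care", "patient", "doctor"],
]

filtered = drop_rare_terms(docs, min_doc_count=2)
print(filtered)  # [['health', 'care'], ['health'], ['health', 'care']]
```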